In Part 1, we created an EC2 instance on AWS, used a cron job to run a bash script that continuously exports logs to a file on the EC2 instance, and used another bash script (also automated by a cron job) to upload the logs to an S3 bucket. Now, using FluentD, let’s pull those logs from S3 into our local Aria Operations for Logs.

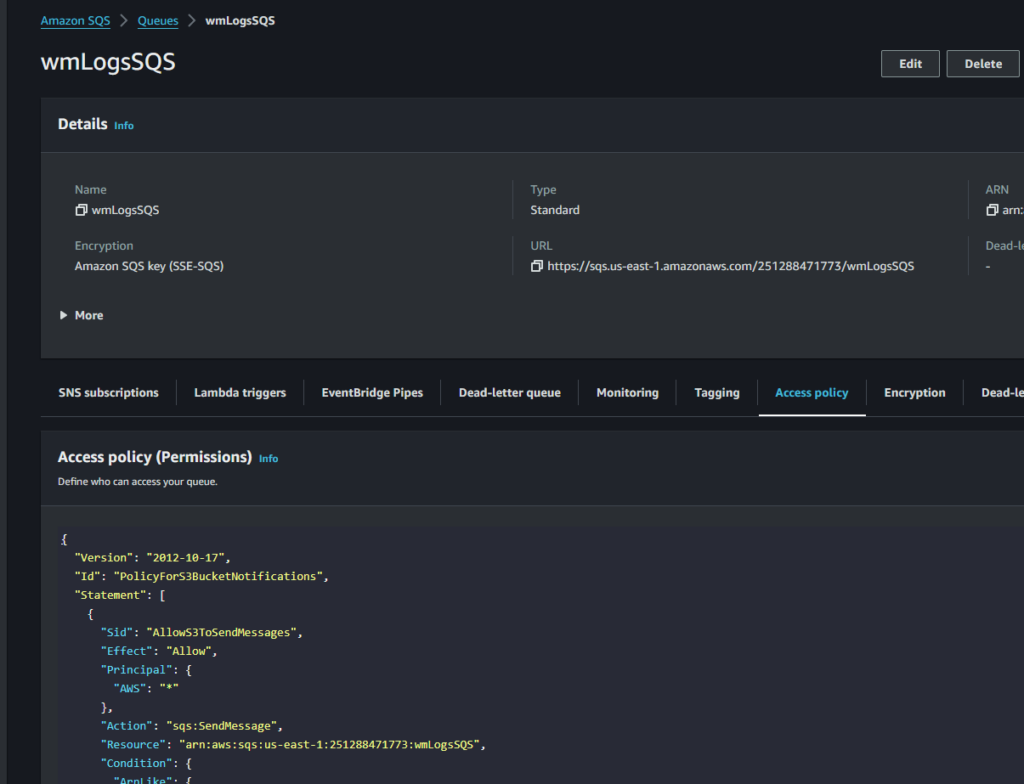

First, we’ll want to create an SQS queue. I’ve called mine wmLogsSQS. Then let’s set an access policy on the queue, substituting your own account ID, region, queue name, and bucket name:

{

"Version": "2012-10-17",

"Id": "PolicyForS3BucketNotifications",

"Statement": [

{

"Sid": "AllowS3ToSendMessages",

"Effect": "Allow",

"Principal": {

"AWS": "*"

},

"Action": "sqs:SendMessage",

"Resource": "arn:aws:sqs:us-east-1:251288471773:wmLogsSQS",

"Condition": {

"ArnLike": {

"aws:SourceArn": "arn:aws:s3:::wm-logs-s3"

}

}

}

]

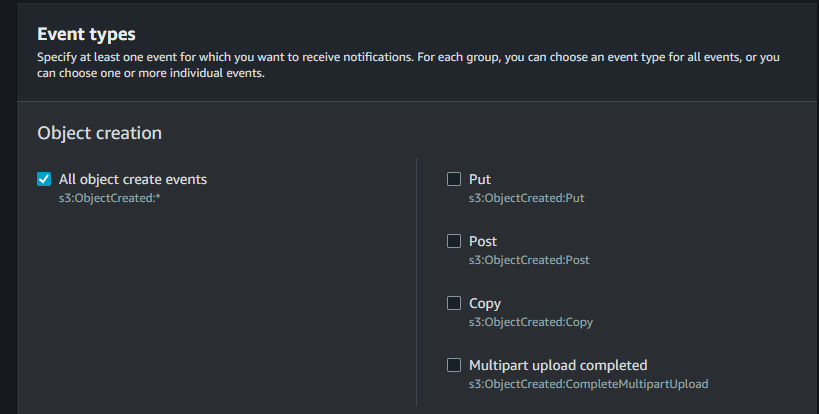

}Then, under Properties on our s3 bucket (wm-logs-s3, we want to create an EVENT, called wmLogsEvents, select All Object Create Events:

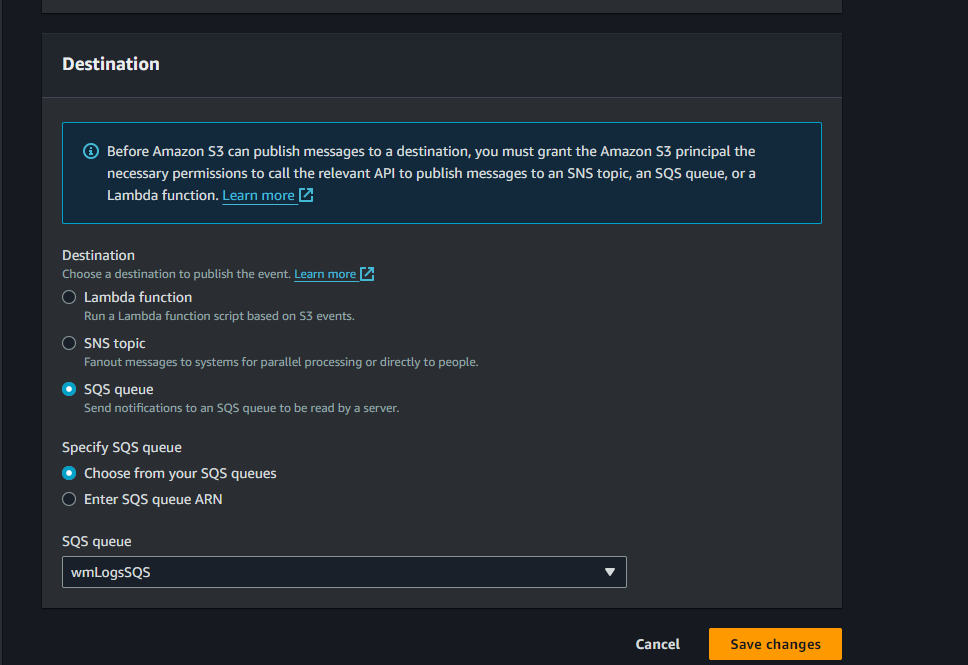

…and assign our wmLogsSQS queue as the destination.
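The queue policy and the bucket event above can also be generated from a script, which helps avoid typos in the ARNs. Here is a minimal sketch, not the method used in this post: the function names `render_queue_policy` and `render_notification_config` are hypothetical helpers, and the values passed at the bottom are the ones from this walkthrough, so swap in your own.

```shell
#!/usr/bin/env bash
# Sketch: render the SQS access policy and the S3 event notification as JSON.
# Function names are hypothetical helpers, not part of any AWS tool.

# Usage: render_queue_policy ACCOUNT_ID REGION QUEUE_NAME BUCKET_NAME
render_queue_policy() {
  local account_id="$1" region="$2" queue="$3" bucket="$4"
  cat <<EOF
{
  "Version": "2012-10-17",
  "Id": "PolicyForS3BucketNotifications",
  "Statement": [
    {
      "Sid": "AllowS3ToSendMessages",
      "Effect": "Allow",
      "Principal": { "AWS": "*" },
      "Action": "sqs:SendMessage",
      "Resource": "arn:aws:sqs:${region}:${account_id}:${queue}",
      "Condition": {
        "ArnLike": { "aws:SourceArn": "arn:aws:s3:::${bucket}" }
      }
    }
  ]
}
EOF
}

# Usage: render_notification_config ACCOUNT_ID REGION QUEUE_NAME EVENT_NAME
render_notification_config() {
  local account_id="$1" region="$2" queue="$3" event_name="$4"
  cat <<EOF
{
  "QueueConfigurations": [
    {
      "Id": "${event_name}",
      "QueueArn": "arn:aws:sqs:${region}:${account_id}:${queue}",
      "Events": ["s3:ObjectCreated:*"]
    }
  ]
}
EOF
}

# Print both documents using the names from this post.
render_queue_policy 251288471773 us-east-1 wmLogsSQS wm-logs-s3
render_notification_config 251288471773 us-east-1 wmLogsSQS wmLogsEvents
```

The policy output can be pasted into the queue's access policy editor in the console. If you save the second document to a file (say notification.json, a name I'm assuming here), it should be possible to apply it with `aws s3api put-bucket-notification-configuration --bucket wm-logs-s3 --notification-configuration file://notification.json`, provided your CLI credentials are allowed to modify the bucket.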

I’ve gone ahead and created a VM in my vCenter. I’ll be using Ubuntu 22.04 on my local VM, which first needs Ruby and the dev tools installed:

sudo apt update

sudo apt install -y ruby-full build-essential

…and then install FluentD:

sudo gem install fluentd

Next, let’s get the AWS CLI on this VM:

sudo apt update

sudo apt install awscli

Then let’s configure our credentials:

aws configure

and test S3 access:

aws s3 ls s3://wm-logs-s3

Then install the FluentD AWS SQS plugin:

sudo gem install fluent-plugin-aws-sqs

Install the FluentD S3 plugin:

sudo gem install fluent-plugin-s3

Then let’s install the FluentD plugin for Aria Operations for Logs:

sudo gem install fluent-plugin-vmware-loginsight
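Before moving on, it can be worth confirming that everything installed so far is actually on the PATH. This is a small sketch of one way to check; `missing_tools` is a hypothetical helper name of my own, not something shipped with FluentD or the AWS CLI.

```shell
#!/usr/bin/env bash
# Sketch: report which of the tools installed above are missing from PATH.
# `missing_tools` is a hypothetical helper name.

# Prints each missing command on its own line; returns 1 if any are missing.
missing_tools() {
  local status=0 tool
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "$tool"
      status=1
    fi
  done
  return "$status"
}

if missing_tools ruby gem fluentd aws; then
  echo "all tools found"
else
  echo "install the tools listed above before continuing" >&2
fi
```

To check the plugins themselves, `gem list fluent-plugin` should list the three gems installed in this section.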

Create a fluentd.service file:

cd /etc/systemd/system

sudo nano fluentd.service

Copy/paste this content:

[Unit]

Description=Fluentd Log Forwarder

After=network.target

[Service]

Type=simple

User=root

Group=root

ExecStart=/usr/local/bin/fluentd -c /etc/fluent/fluent.conf

Restart=always

[Install]

WantedBy=multi-user.target

Now create a fluent.conf file:

sudo mkdir -p /etc/fluent

sudo fluentd --setup /etc/fluent

Copy/paste the below into the config, using your own AWS keys/secrets, S3 bucket name, and region, and the host (IP address or hostname) where your Aria Operations for Logs instance resides:

<source>

@type s3

s3_bucket "wm-logs-s3"

s3_region "us-east-1"

store_as "text"

tag "s3.logs"

add_object_metadata true

match_regexp production_.*

<sqs>

queue_name wmLogsSQS

</sqs>

</source>

<source>

@type sqs

sqs_url "https://sqs.us-east-1.amazonaws.com/251288471773/wmLogsSQS"

region "us-east-1"

tag "sqs.logs"

<assume_role_credentials>

role_arn "arn:aws:iam::251288471773:role/wmLogsRole"

role_session_name "FluentdSQSSession"

</assume_role_credentials>

</source>

<match s3.logs>

@type vmware_loginsight

host "192.168.110.248"

port "9543"

ssl_verify false

<buffer>

timekey 1m

timekey_wait 10s

timekey_use_utc true

</buffer>

</match>

<system>

log_level debug

</system>

Let’s test for no errors:

fluentd --dry-run -c /etc/fluent/fluent.conf
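One setting in the config worth a second look is `match_regexp production_.*`, which limits processing to S3 object keys that match the pattern. Below is a rough way to preview which keys would get through. It assumes the plugin applies the pattern as an unanchored regular expression, and it uses `grep -E` as a stand-in for Ruby's regex engine, so treat it as an approximation. The helper name `filter_keys` and the sample keys are made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch: preview which S3 object keys a match_regexp pattern would select.
# Assumes unanchored matching; grep -E stands in for Ruby's regex engine,
# so results may differ for Ruby-specific syntax.

# Usage: filter_keys PATTERN  (reads one key per line on stdin)
filter_keys() {
  grep -E -- "$1" || true   # "no keys matched" is not treated as an error
}

# Hypothetical object keys, for illustration only.
printf '%s\n' \
  'production_2023-10-01.log' \
  'staging_2023-10-01.log' \
  'logs/production_app.log' |
  filter_keys 'production_.*'
```

With these sample keys, the first and third lines pass and the staging key is dropped. If your uploads from Part 1 use a different naming scheme, the pattern in fluent.conf needs to change to match.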

Let’s start fluentd through the systemd service we created:

sudo systemctl start fluentd
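After creating or editing a unit file, it is generally a good idea to have systemd reload its configuration, and enabling the service makes it come back after a reboot. Here is a sketch of those steps. It only prints the commands by default; `DRY_RUN`, `run_step`, and `start_fluentd_service` are hypothetical names of my own, and you would set `DRY_RUN=0` to actually execute them.

```shell
#!/usr/bin/env bash
# Sketch: register and start the fluentd unit, printing commands by default.
# DRY_RUN, run_step, and start_fluentd_service are hypothetical names;
# set DRY_RUN=0 to execute the commands instead of printing them.

run_step() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"          # show the command without running it
  else
    "$@"
  fi
}

start_fluentd_service() {
  run_step sudo systemctl daemon-reload          # pick up the new unit file
  run_step sudo systemctl enable --now fluentd   # start now and on every boot
  run_step systemctl status fluentd --no-pager   # confirm it is active
}

start_fluentd_service
```

Since the unit file does not pass `--log`, output from the service should end up in the journal, which `journalctl -u fluentd` can display.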

Or run fluentd in the background by hand, writing its output to a log file:

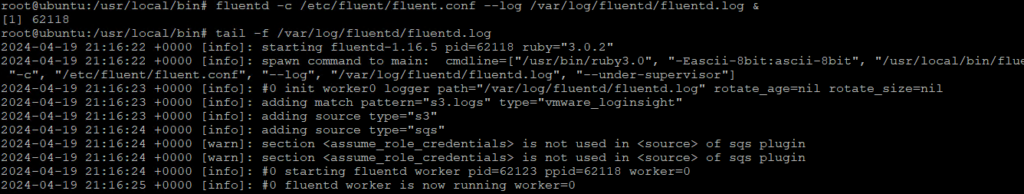

fluentd -c /etc/fluent/fluent.conf --log /var/log/fluentd/fluentd.log &

and check that the log is being written correctly:

sudo tail -f /var/log/fluentd/fluentd.log

We can see at the top that process 62118 is running. This is our fluentd process. You’ll also see at the bottom that fluentd is running a worker. There’s also a message about <assume_role_credentials> not being used, but it’s working nonetheless.
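With `log_level debug` set, the log gets noisy, and messages like the one about the role credentials are easy to miss. Here is a small sketch for pulling out only the lines that need attention. It assumes fluentd's default text log format, where the level appears in square brackets such as `[warn]`; the function name `fluentd_problems` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: show only warning and error lines from a fluentd log file.
# Assumes fluentd's default text log format, where the level appears in
# square brackets, e.g. "[warn]". `fluentd_problems` is a hypothetical name.

# Usage: fluentd_problems [LOGFILE]
# Defaults to the log path used in this post.
fluentd_problems() {
  local logfile="${1:-/var/log/fluentd/fluentd.log}"
  # "|| true" keeps an empty result from being reported as a failure.
  grep -E '\[(warn|error|fatal)\]' "$logfile" || true
}
```

Calling `fluentd_problems` with no argument reads the log file from the command above. Pass another path if your log lives elsewhere.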
