Aria Operations for Logs has a large marketplace of content packs for importing logs from many third-party applications, but collecting logs from native public clouds into an on-prem Aria Operations for Logs instance is not so well served, at least not yet. Until a native solution surfaces, there are workarounds, and I’ll detail one below that relies on bash scripts, cron jobs, and Fluentd to get the job done. My example uses an AWS EC2 instance, but the same logic could be repurposed to forward logs to an on-prem Aria Operations for Logs instance from any public cloud provider.

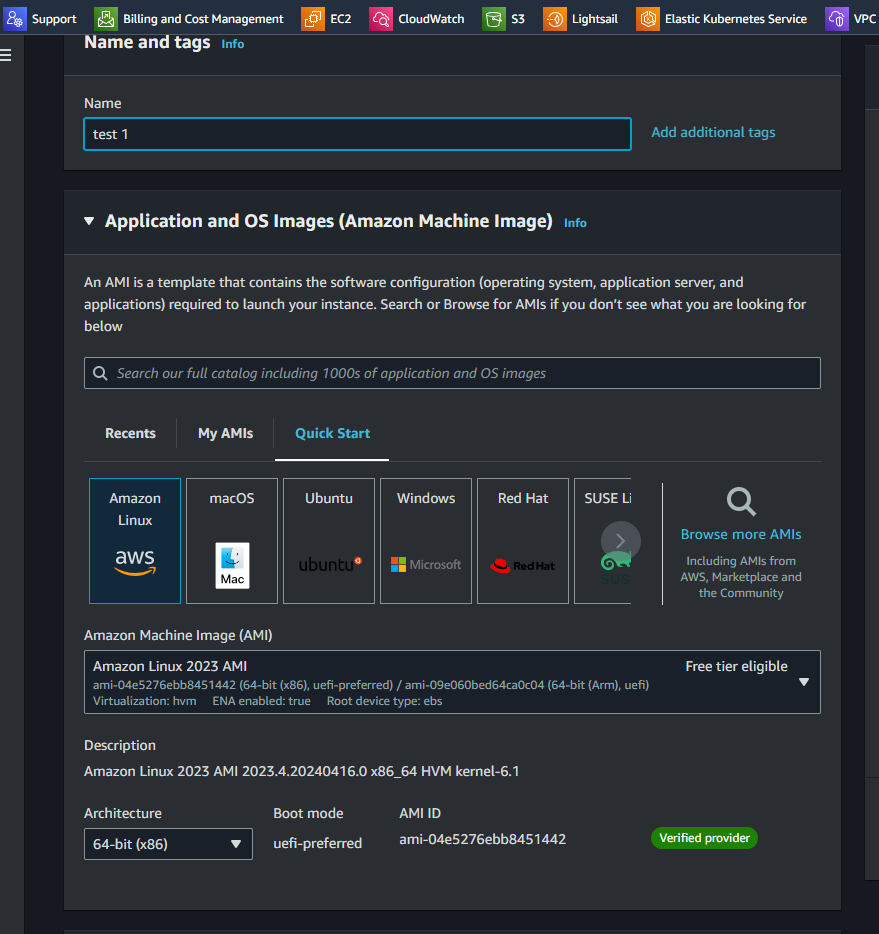

Let’s get started by creating our EC2 instance. I’m going to use Amazon Linux.

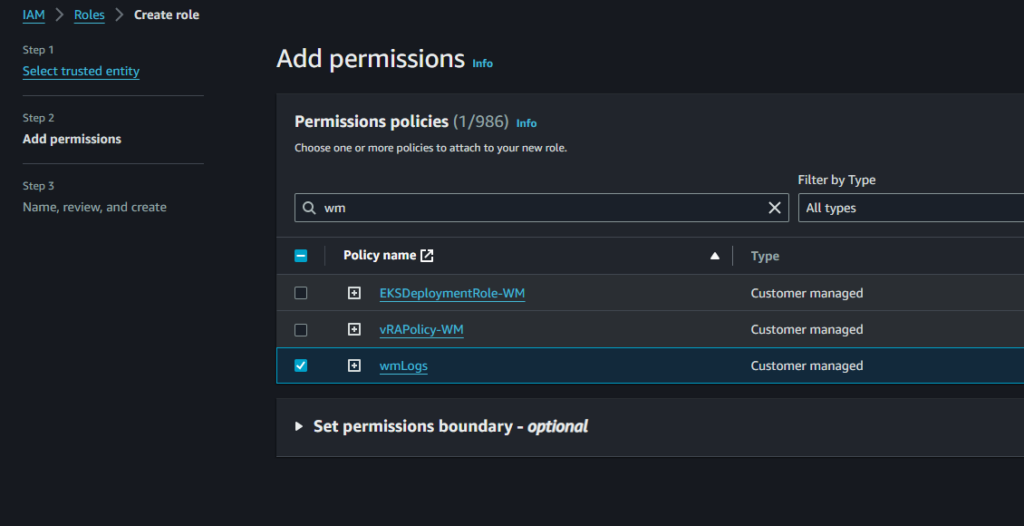

We’re going to need an IAM role that references a policy allowing all S3 actions. You can always reduce the permissions later (practicing the principle of least privilege), but I’ve found there are enough potential roadblocks in testing a new solution that you don’t want a policy limitation creating unnecessary errors. You’ll have enough to troubleshoot without a strict policy.
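When you do come back to tighten things up, a narrower policy might look like the sketch below. It assumes the instance only ever uploads objects into a bucket named wm-logs-s3 (the bucket used later in this post); the file path, the Sid, and the choice of a single s3:PutObject action are my assumptions, not something this setup requires. A policy this narrow would not allow creating the bucket from the CLI, and reading the logs back out of S3 would likely need additional actions such as s3:GetObject and s3:ListBucket.

```shell
#!/bin/bash
# Sketch: write a narrower IAM policy document to a local file for review.
# Assumptions: the bucket is named wm-logs-s3 and the instance only uploads objects.
POLICY_FILE="${POLICY_FILE:-/tmp/wm-logs-upload-policy.json}"

cat > "$POLICY_FILE" <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AllowLogUploads",
      "Effect": "Allow",
      "Action": "s3:PutObject",
      "Resource": "arn:aws:s3:::wm-logs-s3/*"
    }
  ]
}
EOF

echo "Policy written to $POLICY_FILE"
```

The JSON in that file can be pasted into the policy editor in the IAM console once the all-actions policy is no longer needed.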

I’ve created a role called wmLogs…

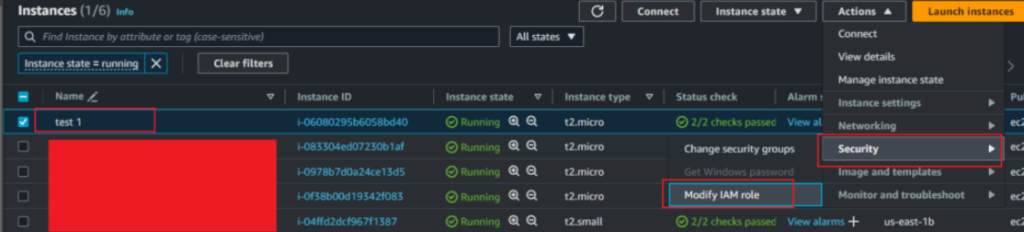

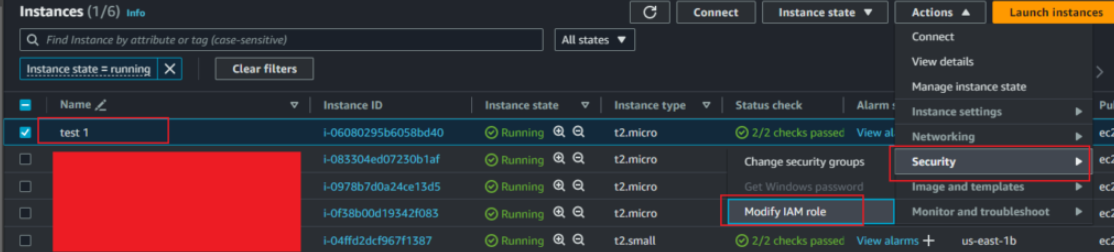

and in the Security menu of our EC2 instances, I’ll select Modify IAM Role…

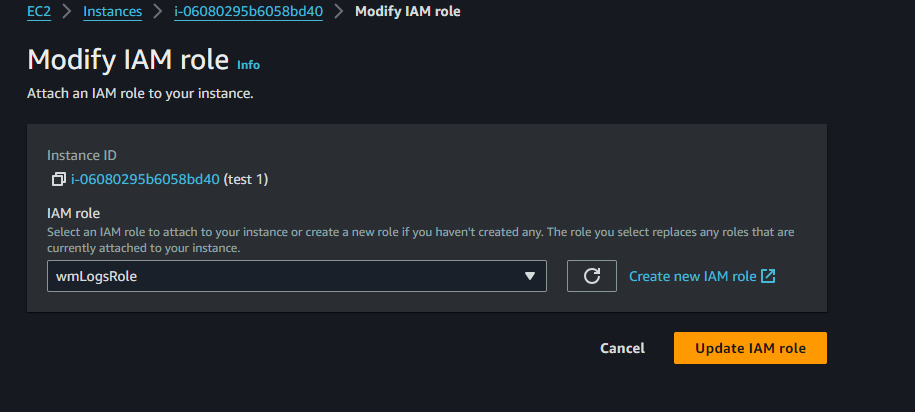

where I’ll assign wmLogsRole to my EC2 instance.

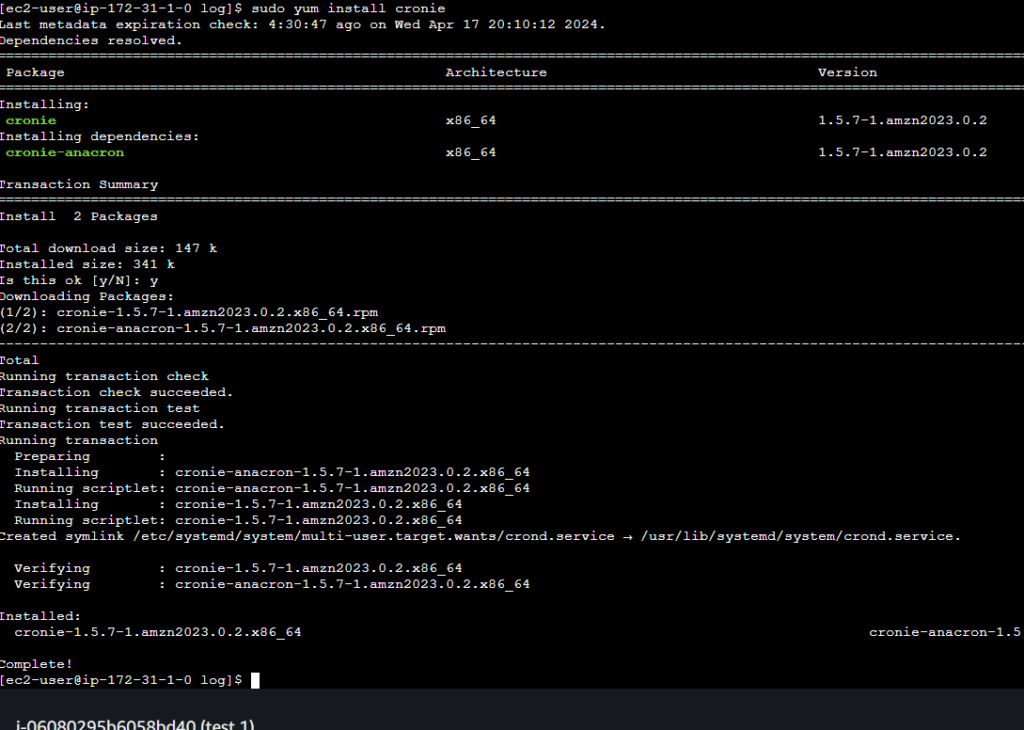

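Inside the EC2 instance we’ll need to run cron jobs, so install cronie:

sudo yum install cronie

If the crond service isn’t running after the install, enable and start it with sudo systemctl enable --now crond.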

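Now we need to create a bash script called log-journal.sh, which continuously exports journal entries to /var/log/journal_export.log. Copy the contents below into a new file at /usr/local/bin/log-journal.sh (the path the systemd unit in the next step expects) and make it executable with sudo chmod +x /usr/local/bin/log-journal.sh: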

#!/bin/bash

# Script to continuously export journal logs to a file

# Ensure the log file exists or create it

touch /var/log/journal_export.log

# Set permissions to make it readable (optional)

chmod 644 /var/log/journal_export.log

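# Start exporting logs (append directly to the file; piping through tee would also echo each line to stdout, which systemd sends back into the journal)

journalctl -f >> /var/log/journal_export.log

Then we’ll create a unit file (journal-export.service) that runs the bash script (log-journal.sh) from the previous step: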

sudo nano /etc/systemd/system/journal-export.service

Copy and paste the contents below into the file and save:

[Unit]

Description=Export journalctl to log file

After=network.target

[Service]

Type=simple

ExecStart=/usr/local/bin/log-journal.sh

Restart=always

RestartSec=10

[Install]

WantedBy=multi-user.target

Let’s reload systemd, then enable and start the service:

sudo systemctl daemon-reload

sudo systemctl enable journal-export.service

sudo systemctl start journal-export.service

…and verify the service is active and running:

sudo systemctl status journal-export.serviceThen, let’s monitor the log file to see it rain log entries in front of your eyes:

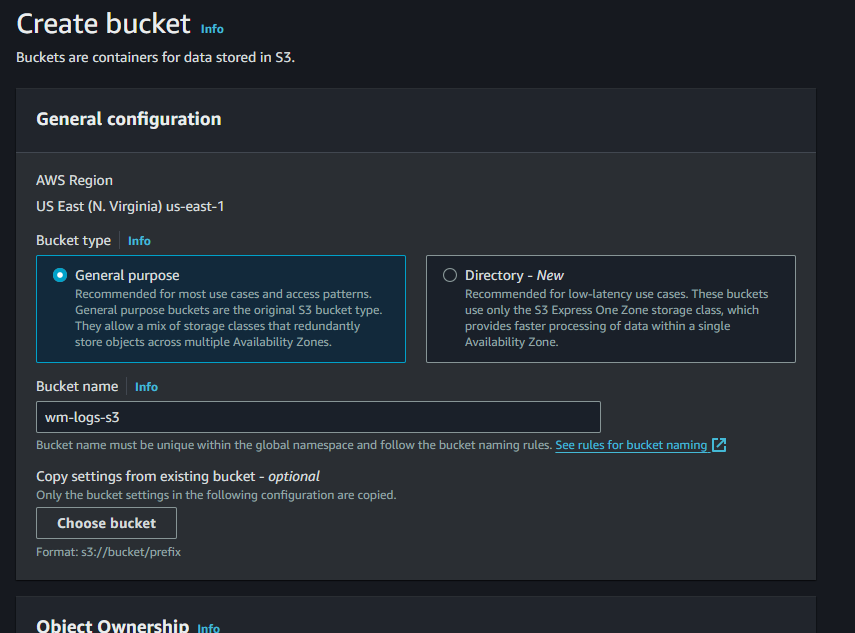

sudo tail -f /var/log/journal_export.logNow let’s create our s3 bucket: wm-logs-s3 (or whatever you choose):

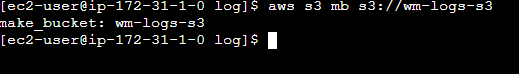

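Back in the CLI, aws s3 mb (Make Bucket) creates the bucket from the command line. If you already created it in the console, the command may simply report that the bucket exists, and you can move on: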

aws s3 mb s3://wm-logs-s3

Let’s create a file (script) to handle the uploading of logs to the new s3 bucket:

sudo nano /usr/local/bin/upload_logs_to_s3.sh

Copy and paste the code below into the file (replace wm-logs-s3 in S3_BUCKET with your own bucket name):

#!/bin/bash

# Timestamp for file naming

TIMESTAMP=$(date +"%Y%m%d%H%M%S")

LOG_FILE="/var/log/journal_export.log"

S3_BUCKET="s3://wm-logs-s3"

# Compress the log file

tar -czPf "/tmp/${TIMESTAMP}_journal_export.tar.gz" "$LOG_FILE"

# Upload to S3

aws s3 cp "/tmp/${TIMESTAMP}_journal_export.tar.gz" "${S3_BUCKET}/${TIMESTAMP}_journal_export.tar.gz"

# Truncate the log file

cat /dev/null > "$LOG_FILE"

and make the script executable:

sudo chmod +x /usr/local/bin/upload_logs_to_s3.sh

Now let’s add a cron job that runs the script every minute:

sudo crontab -e

After the crontab opens, enter:

* * * * * /usr/local/bin/upload_logs_to_s3.sh

Save and quit. crontab opened in vim for me, so it’s:

:wq

It might be Ctrl-X or Ctrl-O depending on which editor opens crontab for you.
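Two things can bite with a one-minute schedule: cron starts jobs with a minimal PATH, so the aws CLI may not be found if it lives outside the default directories, and a slow upload could still be running when the next minute fires. Here is a sketch of one alternative, assuming flock (from util-linux) is installed and that a file under /etc/cron.d is acceptable in place of root’s crontab. The file names, lock path, and log path are hypothetical.

```shell
#!/bin/bash
# Sketch: generate a cron.d entry for review instead of editing root's crontab by hand.
# Assumptions: flock is installed; the lock and log paths below are placeholders.
CRON_FILE="${CRON_FILE:-/tmp/upload-logs.cron}"

cat > "$CRON_FILE" <<'EOF'
# cron starts jobs with a minimal PATH, so set one that includes the aws CLI
PATH=/usr/local/bin:/usr/bin:/bin
# Files in /etc/cron.d take a user field (root) before the command.
# flock -n skips this run if the previous one still holds the lock.
* * * * * root flock -n /var/lock/upload_logs_to_s3.lock /usr/local/bin/upload_logs_to_s3.sh >> /var/log/upload_logs_cron.log 2>&1
EOF

echo "Review $CRON_FILE, then install it with:"
echo "  sudo install -m 644 -o root -g root $CRON_FILE /etc/cron.d/upload-logs"
```

Redirecting the job’s output to a log file also gives you somewhere to look when an upload silently stops working.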

Let’s gain root access:

sudo -i

and confirm the script is executable, then run it once by hand to test it:

chmod +x /usr/local/bin/upload_logs_to_s3.sh

/usr/local/bin/upload_logs_to_s3.sh

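I get the output below. Only the upload: line is printed by the script as listed above; the other status lines come from echo statements not shown in that listing.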

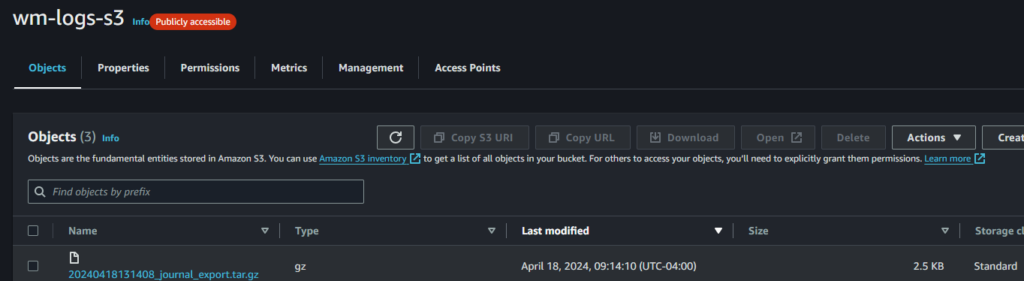

[root@ip-172-31-1-0 ~]# chmod +x /usr/local/bin/upload_logs_to_s3.sh

/usr/local/bin/upload_logs_to_s3.sh

Creating tar file from /var/log/journal_export.log

Tar file created successfully.

Uploading tar file to s3://wm-logs-s3

upload: ../tmp/20240418131408_journal_export.tar.gz to s3://wm-logs-s3/20240418131408_journal_export.tar.gz

File uploaded successfully.

Truncating log file

Log file truncated successfully.

And if I check wm-logs-s3 back in the AWS Console, I see the uploaded journal_export archive.
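One thing to watch in the upload script: it truncates the log even when the upload fails, so a bad minute (expired credentials, network blip) loses that minute’s entries. A minimal sketch of a more defensive variant follows. The function names rotate_and_upload and upload_archive are hypothetical, and upload_archive is split out only so the copy step can be swapped or stubbed. Lines written between the tar step and the truncate can still be lost, so treat this as a starting point.

```shell
#!/bin/bash
# Sketch: archive, upload, and truncate the log only when the upload succeeded.

# Hypothetical wrapper around the copy step; override it to test without AWS.
upload_archive() {
  aws s3 cp "$1" "$2"
}

rotate_and_upload() {
  local log_file="$1" dest="$2"
  local timestamp archive
  timestamp=$(date +"%Y%m%d%H%M%S")
  archive="/tmp/${timestamp}_journal_export.tar.gz"

  # Nothing to ship if the log is missing or empty
  [ -s "$log_file" ] || return 0

  tar -czPf "$archive" "$log_file" || return 1

  if upload_archive "$archive" "${dest}/${timestamp}_journal_export.tar.gz"; then
    : > "$log_file"      # truncate in place so the writer keeps its open file
    rm -f "$archive"     # avoid piling up one archive per minute in /tmp
  else
    echo "Upload failed; leaving $log_file untouched" >&2
    rm -f "$archive"
    return 1
  fi
}

# Example call (commented out so sourcing this file has no side effects):
# rotate_and_upload /var/log/journal_export.log s3://wm-logs-s3
```

Skipping empty logs also keeps the bucket from filling with near-empty archives during quiet minutes.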

Now we need to get Fluentd installed and forwarding the logs from our S3 bucket over to our Aria Operations for Logs instance. See you in part 2.
